Google Street View hit Japan

During Novell's Hack Week I started a project I was interested around moonlight, but haven't really done it. I'll revisit it once I got one example running (so, no example runs fine yet).

Recently Google Street View has launched in Japan this Summer, and it caused a lot of flamatory and bashing against Google. Details can be read at GlobalVoicesOnline ([*1] and [*2]). Similar arguments occured in France, England, Canada etc., and the U.S (I think, everywhere).

I'm not to explain all about the arguments, but to just update my recent status. Read those articles above if you are interested.

As one of the board members of MIAU, I was privately busy for preparing a symposium on Google's Street View, to discuss it publicly, for about 60 attendees. (Japanese news links: [*1], [*2] and [*3])

Apart from the organization which stands at neutral position, I keep (blogging about it (in Japanese) mostly raising caution that people should calm down, point problems precisely in precise context and distinguish issues and nonissues for each subject people raised, so that we do not have to shut down any kind of web service deployments unnecessarily. Even standing on such prudent position, it's been very hard to correct those furious people. I am also defamed by a lot of people including Anonymous Cowards in slashdot.jp for posting fair (in my belief) evaluation on those opinions. (Like "If there's risk of some property rights then it should be evaluated in an evenhanded fashion" => "F you")

It's not an easy bug to get resolved.

rejaw

Yesterday friends of mine have released Rejaw, a microblogging-like, Comet-based threaded web chat infrastructure. It is with a full set of API. The introduction can be read here. I was one of the alpha testing users.

There are already some articles introducing Rejaw:

- Rejaw is Not Just Another Twitter Clone (mashable)

- Rejaw: Combining Microblogging + Chat (readwriteweb)

interesting article on how MySpace and Facebook are failing in Japan

This article mostly explains correctly why MySpace and Facebook are not accepted by Japanese.

joining SNS by real name regarded as dangerous

Yes, when Facebook people visited Japan and explained their strategy to expand their market in Japan, by advertising "trusted by real name" network, we found it mostly funky. As the article explained, it is already achieved by mixi. And by that time, mixi is already regarded as dangerous "by exposing real name too widely".

One of the example accidents happened in 2005 August, at the comic market. Comic market place is usually flooded by terrible numbers of otaku people, and they used to be looking bad in general. One of a part time student workers at a hot dog stand wrote an entry in "mixi diary" like: "there was a lot of ugly otaku people there. eek!"

While it is pretty much straightforward, those otaku guys got hurt (at least some of them loudly claimed so), upset and started "profiling" who is that student. It was very easy in mixi, because mixi at that time encouraged to put real-life information with real name. No sooner she was then flooded by a lot of blaming voices, she disappeared from mixi.

OK, she was too careless, or ideally she should not write it (it is always easy to say something ideal). But she was not a geek and does not really understand how the network (mixi) is "open" to others (it is not really "open" by invitation filters, but as mixi grew up to have millions of users, it is of course not "trusted network" anymore). She didn't blame a specific person, and hadn't felt guilty until the company forced her to apologize. This kind of "careless" accidents has kept happening in mixi and it became a social problem.

Nowadays we have the same issue around "zenryaku-prof", where not a few children has faced troubles (for example sexual advances) by the face that the network is "open" to the web by default, while they think it isn't.

Though there must have been similar incidents outside Japan too (for example people fired by his or her blogs), the above (I believe) is the general understanding of the situation in Japan.

Mobile web madness

Another obvious point for Japanese, but would not for else, is that Japanese mobile web support is more important than anything, to get more people joined. Mixi is of course accessible from our cell phones. Even more funky example is "mobage-town", which used to limit access only from cell phones(!). (It is done by sending "contract ID", which is terrible BTW.) Mobage-town is one of the mega hit site in Japanese mobile web. It is mostly for games on the cell phones, but also has a huge SNS inside. It is also funky that the network used to be mostly filled by under-20 children. (Now children grew up to above 20, so the number is not obvious.)

Typically Japanese people spend a lot of boring time, between home and their offices or schools, on trains or buses. They can only do some limited "interesting" stuff. It used to be readings for example, and nowdays it is the mobile web.

Twitter was very successful unlike those failing players. Though I don't think the explanation on the TechCrunch article is right. Twitter had spread by "movatwitter", which is designed as the mobile web UI (and twitter is fully accessible by the API) as well as some additional values such as on-the-fly photo uploader (like Gyazickr for iPhone). It also filled our need (microblogging is a very good way to fill our boring time during our daily move). It lived very well in the mobile web land: no Javascript, no applets, no requirements for huge memory allocation.

When facebook is advertised with its API, what came to my mind was: "Is it even possible to make it for Japanese mobile web? nah"

While we, as a member of "open" world wide web, do not really like this mobile-only web (probably we should read Jonathan Zittrain), it is not a trivial demand that a cell phone is accessible to the mobile-only network. For example, iPhone 3G does not support it (iPhone BTW lacks a lot of features that typical Japanese people expect: for example, camera shake adjuster, it does not provide mobile TV capability, the mobile wallet etc.). It is often referred as "Galapagos network", which is intended as failing to expand their businesses abroad (one of the commentor on the TechCrunch entry mentions it. It is even funny that those iPhone enthusiasts try to claim that their web applications are "open" (as compared to Japanese mobile-only network).

BTW a commentor on the TechCrunch entry tries to object the fact written by the article by quoting google trends worldwide. But (including the graph on top of the article) it is a typical failure on measuring Japanese web access statistics: it does not reflect mobile web access. It is already explained (in Jaanese) very well. The simple fact is that it is becoming less ambient through Alexa, google trends or whatever similar.

SNSes are often domain specific

We would have seen similar phenomena in everywhere else. In China it is QQ. Orkut quickly became SNS for Brazil. There is no universal best.

What other SNSes find business chances in non-mainland countries is some specific purpose. For example, MySpace in Japan is good for producing musicians with its rich UI (many of them would also use mixi as well though).

You Mono/.NET users do NOT use XML, because you don't really know XML

Are you using XML for general string storage? If yes, most of you are likely wrong. If you do not understand why the following XML is wrong and how you can avoid it, do NOT use XML for your purpose.

<xml version="1.0" encoding="utf-8"> <root>I have to escape \u0007 as </root>

If you gave up answering by yourself, read what Daniel Veillard wrote 6 years ago.

System.Json

I read through Scott Guthrie's entry on Silverlight 2.0 beta2 release and noticed that it ships with "Linq to JSON" support. I found it under client SDK. The documentation simply redirects to System.Json namespace page on MSDN.

So, last night I was a bit frustrated and couldn't resist. I'm not sure if MS implementation should work like this yet though.

(You can build it only manually right now; "cd mcs/class/System.Json" and "make PROFILE=net_2_1". The assembly is under mcs/class/lib/net_2_1. You can't do "make PROFILE=net_2_1 install".)

I picked up a sample on another Linq to JSON project to try mine - that is, James Newton-King's JSON.NET. At first, I simply replaced type names and it didn't work. The problematic lines are emphasized below:

using System;

using System.Collections.Generic;

using System.Linq;

using System.Json;

using JsonProperty = System.Collections.Generic.KeyValuePair<

string, System.Json.JsonValue>;

public class Post

{

public string Title;

public string Description;

public string Link;

public List<string> Categories = new List<string> ();

}

public class Test

{

public static void Main ()

{

List<Post> posts = new List<Post> ();

posts.Add (new Post () { Title = "test", Description = "desc",

Link = "urn:foo" });

JsonObject rss =

new JsonObject(

new JsonProperty("channel",

new JsonObject(

new JsonProperty("title", "Atsushi Eno"),

new JsonProperty("link", "http://veritas-vos-liberabit.com"),

new JsonProperty("description", "Atsushi Eno's blog."),

new JsonProperty("item",

new JsonArray(

from p in posts

orderby p.Title

select (JsonValue) new JsonObject(

new JsonProperty("title", p.Title),

new JsonProperty("description", p.Description),

new JsonProperty("link", p.Link),

new JsonProperty("category",

new JsonArray(

from c in p.Categories

select (JsonValue) new JsonPrimitive(c)

))))))));

Console.WriteLine ("{0}", rss);

JsonValue parsed = JsonValue.Parse (rss.ToString ());

Console.WriteLine ("{0}", parsed);

}

}

Unlike Linq to JSON in JSON.NET, JsonObject and JsonArray in System.Json do not accept System.Object as argument. Instead, it requires strictly-typed generic IEnumerable of JsonValue. That is, for example, IEnumerable<JsonPrimitive> is invalid. Thus I was forced to add explicit cast in my linq expression.

It may be improved in SL2 RTM though. Linq to XML classes accept System.Object argument to make it simple (and well, yes, sort of error-prone).

no passionate SOAP supporter in Mono yet

Two years ago I once worked on System.Web.Services complete 2.0 API. That was the worst shamest work I ever had (I hate SOAP). The work was done two years ago, and then there has been no maintainer since the completion. If there is anyone who loves SOAP, he or she can be a hero or heroine.

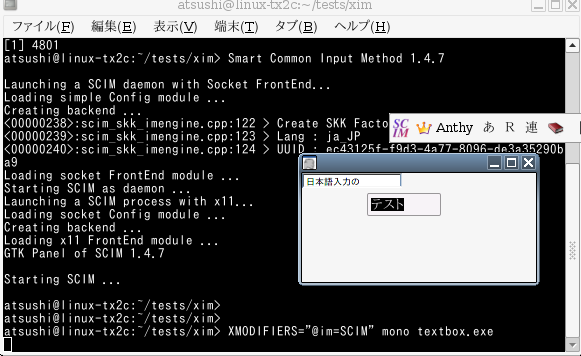

XIM support on Windows Forms

Recently Jonathan (Pobst) announced that our Winforms became feature complete.

Besides feature completeness, there is another important change in the next mono release. We were not ready for multi byte character input. Since we use X11 for Winforms on Linux, we have to treat multi byte input as special case.

We had some code that handles XIM but that was not for text input support. Since there was no one in the winforms team who lives in multi byte land, we hadn't take care of it.

I was not familiar with those stuff, so it took somewhat long time, but lately I got it practically working. I use scim and Atok X3 (it is one of popular traditional commercial Japanese IM engine), which show different behavior / use of X.

The style is over-the-spot (like .NET) and hence it is not as decent as Gtk (i.e. on-the-spot). Also, it supports only XIM (it would be possible to support better IM frameworks like IIIMF). But for now I'm content with a working international input support.

So if you are living in multi byte land, try the next release or daily build version of mono and feel happy :-)

Linq to DataSet

Since (I thought) I'm done with winforms XIM support, I moved away and started working on System.Data.DataSetExtensions two days ago. Now it's feature complete.

WebHttpBinding - my unexpected Hack Week outcome

Novell had the second "Hack Week". At the beginning I never thought that I was going to implement WebHttpBinding from .NET 3.5 WCF (I was planning to play around some XSLT stuff but soon changed my mind). Actually I have to say, it is not an outcome from just a week - from our patch mail lists I seem to have spent about two weeks. So, I'm cheating ;-)

Anyhow, the Hack Week is over, and now I have partly implemented WebHttpBinding and its family, with a (simple) sample pair of a client and a server that sort of work.

Note that it is (and our WCF classes are) immature and not ready to fly yet.

For details

Want to know the details? Are you serious? OK ... so we have:

- JsonReaderWriterFactory and JSON reader and writer implementations (which supports "__type" as runtime type)

- DataContractJsonSerializer, which is JSON reader/writer-friendly implementation of XmlObjectSerializer

- WebHttpBinding and WebMessageEncodingBindingElement which are to create MessageEncoder that 1) reads stream of JSON or XML into a Message, and/or 2) writes Message into JSON or XML

- UriTemplate and family, which are 1) to retrieve request parameters from its requested HTTP GET URL and/or 2) to generate HTTP GET request URL.

- WebHttpBehavior, which is to provide HTTP GET support by overwriting IClientMessageFormatter and IDispatchMessageFormatter (they are used for example to convert GET URL from and to the parameters for the runtime method (MethodInfo) call.

- QueryStringConverter, which is used to convert request parameter string (retrieved by UriTemplate) to its expected Type.

- WebHttpDispatchOperationSelector, which works as IDispatchOperationSelector and makes use of UriTemplateTable to find out matching a service operation based on the HTTP request.

... and couple of other bits (such as WebChannelFactory or WebServiceHost, which are really cosmetic).

We still don't have couple of things (I am enthusiastic for explaining how we are incomplete :/) :

- configuration support

- JsonQueryStringConverter. It's just not done yet.

- WebScriptEnablingBehavior (and hence ASP.NET AJAX integration)

- WebOperationContext and its helpers. I'm not sure how I can use them (especially when to instantiate IExtension<OperationContext>).

Here I haven't mentioned everything in System.ServiceModel.Syndication namespace, which is already done.

I also have current class status of System.ServiceModel.Web.dll in Olive.

Stopping making copyright law worse than before

Congraturations Canadian Citizens!

We, MIAU, are also fighting right now. We are so busy on preparing for the upcoming symposium which is to be held on 26th.

Syndication API

Wow, I haven't written anything for 6 months. Actually I had been quite busy(?) on several things (namely founding MIAU). In Mono land I had been working on 2.0 API completion those days.

Anyways. At the end of Mono Summit last month, Miguel sorta asked me to hack Syndication API in .NET 3.5, which will be likely part of Silverlight 2.0. So, after some terrible experience in trip back to home (I have lost my buggage on my returning flight, and I had some bad stomachache for more than a week ...), I have started working on it (while I'm having sparse work days and a lot of off days this month). And now, System.ServiceModel.Syndication is almost done.

(... no, you don't have to waste your precious time on learning WCF. You can use the Syndication API almost independently.)

Moved

This blog was moved. Well, only literally, and the same contents still appear, as long as it is blog text. The rental hosting server I used to use, stepserver.jp, had a critical accident that all files entirely vanished. This is the worst shutdown for me and hence let me decide move to other place.

The firefox add-ons that cause performance down

Today I attended to Firefox devcon Summer 2007 Tokyo. There was more than 150 attendees, which was pretty big for such a conference for an application. I heard that even meetings in Mountain View were not like this. Japanese mozilla community certainly seems to be big.

It is nothing to do with the conference above, but this anonymous guy showed an interesting analysis on which Firefox add-ons cause performance down, with three test pages (6 level tables, 7 level tables and JS-CPU bench). It is written in Japanese so most of you wouldn't be able to read it, but the culprit add-ons can be seen as listed in a table in the middle of the page (the table without "ok"). The biggest one was AdBlock Plus. His add-on adjustments resulted in about 4x perf. boost:

- disable Firebug

- replace Linkification with Text Link

- replace IETab with IEView

- replace Google Toolbar with Googlebar Lite

- replace noScript with McAfeeSiteAdvisor or manually configure Javascript

- disable Greasemonkey unless you actually use it. Stylish works more efficiently than AdBlock or user scripts.

According to this guy, (I'm not sure if it is true but) it seems that IPv6 support is said a bad boy, but it is not true. Interesting.

olive XLinq updates

During Japanese holiday week (aka Golden Week), I made significant updates to our XLinq stuff (System.Xml.Linq.dll) in "olive" tree. It has been about 1 year and half since the first checkin, and except for some cosmetic changes it had been kept as is.

It still lacks couple of things such as xsd support and XStreamingElement, but hopefully it became somewhat functional. And from the public API surface by corcompare it is largely done.

Miguel and Marek has been adding some of C# 3.0 support to the compiler, and I heard that there was another hacker who implemented some type inference (var) stuff, so at some stage we will see Linq to XML in action (either in reality or in test tube).

Other than that I did almost nothing this holiday week, except for spending time on junk talk with Lingr fellows. The worst concern about lingr (which is agreed among us, including one of the four lingr dev. people) is lowering productivity due to too much fun talking with them, especially when it is combined with twitter, which is somehow becoming bigger in Japan ...

Speaking of twitter, I was asked some mono questions (mostly about bugs) by a Japanese hacker and ended up to know that he wrote TwitterIrcGateway and was trying to run it with mono. It is a WinForms application (for task tray control) which requires some soon-to-be-released v1.2.4 features but it just worked out of the box on my machine. It is so useful.

Bugs and standard conformance, when it comes from MS

My recent secondary concern is about the lack of legal mind and English-only nationalism in American House of Representatives on "Ianfu" issue, as well as the concern on Nanking massacre denialists's attack on the film 'Nanking'. But both are political topics that I usually don't dig in depth...

My recent primary concern is about the essential private rights in Japanese private law system, which seems correctly imported from German pandecten and incorrectly adopted to Japanese law. My recent reading Japanese book "the distance between freedom and privilege", which is based on Carl Schmidt and talks about the nature of limitation on (property) rights, was also nice. I'm hoping that those arguments would decrease some basic misunderstandings on the scope of copyrights in principle.

Anyways.

As some of mono community dudes know, I always fight against those who only consider .NET compatibility than conformance to standards. They end up to try to justify date function to be incorrect even in the proposal draft of standards just because of a bug in MS Excel (as well as Lotus), which doesn't really make sense.

Anyways what I remembered on seeing Miguel's doubt on MS compiler (csc.exe) behavior was my own feedback to MS that csc has ECMA 334 violation on comparison between System.IntPtr and null (I believe System.IntPtr.Zero is introduced exactly for this issue). I reported it about one year ago when I was trying to build YaneSDK.NET (an OpenGL-based game SDK) on mono.

This bug had left until Jan 31, and then it was closed without any comments. I quickly reopened it and thus it is still active. Of course I totally don't mind that .NET has bugs (having live / long-standing issues is very usual) including ECMA violations.

Since I have reported couple of .NET bugs, sometimes I saw "interesting" reactions. In this case, MS says that this is "a bug in ECMA spec" so that "they will come up with a new spec". They think they "own" the control of the specification (I'm not talking about the ownership of the copyrights on the specification).

After all, it seems that I'm always concerned about rules and justifications.

Kind of back

Looks like six months have passed since my last entry. I was not dead at least literally. Lately my interest has been on some legal stuff (especially criminal law) which is of course in Japanese context. Anyways ...

So, I have been working on WCF implementation now. The Olive plan was announced back in October, but the work was started much earlier, like in Autumn 2005. Though as you would guess, it has been flaky - I haven't chosen WCF over helping Winforms last spring, or over ASP.NET 2.0 Web Services last winter (and it's still ongoing).

BasicHttpBinding was already working last spring, and ClientBase was working last summer. Ankit had been working on WSDL support and ASP.NET .svc handler, until we decided some work reassignment during the Mono Meeting days in October. (He is now working on MonoDevelop - am so envious! ;-) MonoDevelop hacking is a lot of joy than WS-* sack.)

After making ClientBase working, my primary task in this area has been to get WS-Security (SecurityBindingElement) working. WS-Security and WS-SecurityPolicy are nightmare. There is a lot of work in this area. I dug several security classes in depth to make my sample code simpler, simpler and simpler. Now I have very primitive pair of service and client. And after several bugfixes primarily in System.ServiceModel.dll and System.Security.dll, finally I got my WS-Security enabled message successfully consumed by WCF :-)

Probably none of you would understand why I am so excited about it since the code still is not practically working yet, but I am happy right now. I would need a lot more words to explain why, and I doubt it is worthy :|

Microsoft Permissive License

As I often write here, the worst legal violation I dislike is False Advertising.

Did you ever know that there are more than just one Microsoft Permissive License? I only knew this one which is widely argued that it could be regarded as conformant to Open Source Definition.

I originally disliked the idea that this MS-PL labelled as one of that "Shared Source License", as it rather seemed an attempt to justifying existing restricted shared source licenses, while I like that MS-PL terms in general.

Now, read this Microsoft Permissive License. It is defined as:

(B) Platform Limitation- The licenses granted in sections 2(A) & 2(B) extend only to the software or derivative works that you create that run on a Microsoft Windows operating system product.

This is an excerpt from Shared Source Initiative Frequently Asked Questions:

Q. What licenses are used for Shared Source releases?A. [...] Microsoft is making it easier for developers all over the world to get its source code under licenses that are simple, predictable, and easy to understand. Microsoft has drafted three primary licenses for all Shared Source releases: the Microsoft Permissive License (Ms-PL), the Microsoft Community License (Ms-CL), and the Microsoft Reference License (Ms-RL). Each is designed to meet a specific set of developer, customer, or business requirements. To learn more about the licenses, please review the Shared Source Licenses overview.

(Emphasis by myself.)

Now I wonder if this is the exact reason why Microsoft did not submit MS-PL as Open Source Definition conformant license.

Now I ponder the legal affection on this behavior (as I was a law student). If it is not regarded as illegal false advertising, it would result that any kind of license statements lose reliability. If it is regarded as illegal, it would result that any kind of authors are imposed to keep their licenses every time they modified. Oh, maybe not all. It could be just limited to wherever SOX laws apply. Which is better?

CCHits

Recently I got to know CCHits, a digg-like music ranking website specialized to which are available in Creative Commons license (as a normal ex law student I am a big fan of CC). This is what I really wanted to see or build. Since I just moved to my new room and am without fast connection I cannot practically use it right now, but I really want to try it asap. Music is one of the things that I dream to be free, like cooking recipe where no rights lie and there are still improvements. There is a lot of boring commercial songs that are still used just to make some sounds in shops and waste money.

Now what lacks there is the ranking counts. I'd like to see at least hundreds of votes. When I'm ready, I'd start from listening high-rated songs and add more hits to make this website grown up enough like digg.

There are some great search engines to help finding CC musics: Yahoo! and Google. I love those search engines, not because they are innovative, but because they recognizes how freedom represented as CC is important. There are some other search engines which are rather interested in their own "innovation", but is not something that I am interested.

It also reminded me of what Microsoft people said about Google Spreadsheets. I think such argument that "Google Spreadsheets is not innovative because it is what google acquired" is really ignorable. That made me feel that Microsoft altitude is 10 years behind Google. I am rather interested in the company principle behind (say) Google Book Search.

OpenDocument validator

Someone in the OpenDocument Fellowship read my blog entry on RelaxngValidatingReader and OpenDocument, and then asked me if I can create example code. Sure :-) Here is my tiny "odfvalidate" that validates .odt or its content xml files (any of content.xml, styles.xml, meta.xml, or settings.xml should be fine) using the RELAX NG schema.

I put an example file to validate, actually simply converted OpenDocument specification document by OO.o 2.0. When you validate the document, it will report you a validation error which is really an error in content.xml.

Mono users just can get that simple .cs file and OpenDocument rng schema, and then compile it as "mcs -r:Commons.Xml.Relaxng -r:ICSharpCode.SharpZipLib". Microsoft.NET users can still try it, by downloading those dll files listed there.

Beyond my tiny tool, I think AODL would be able to use it. In the above directory I put a tiny diff file for AODL code. Add Commons.Xml.Relaxng.dll as a reference, add OpenDocument-schema-v1.0-os.rng as a resource and then build. I haven't actually run it so it might not work though.

If any further questions, please feel free to ask me.

We need WinForms bugfixes

Lately I was mostly spending my time on bugfixing core libraries such as corlib and System.dll. Yesterday I got to know that we need a lot of bugfixes in our MWF (managed win forms).

So I have started to look around existing bug list and work on it. At first I thought that it is highly difficult task: to extract simple reproducible code from problematic sources, and of course, to fix bugs. Turned out that it is not that difficult.

Actually, it is very easy to find MWF bugs. If you run your MWF program, it will immediately show you some problems. What I don't want you to do at this stage is just to report it as is. We kinda know that your code does not work on Mono. It's almost not a bug report. Please try to create good bug report. Here I want to share my ideas on how you can try creating good MWF bug reports and bugfixes.

(Dis)claimer: I'm far from Windows.Forms fanboy. Don't hit me.

the background

Mono's Windows Forms implementation is WndProc based, which is implemented on top of XplatUI framework. For drawing we have Theme engine which is implemented on top of System.Drawing which uses libgdiplus on non-Windows and simply GDI+ on Windows.

Thanks to this architecture, if you have both Windows and Linux (and OSX) boxes, you can try them and see if all of them fail or not. In general we have XplatUI implementation and Theme implementation for each environment (XplatUIWin32, XplatUIX11, XplatUIOSX and ThemeClearLooks, ThemeGtk, ThemeNice, ThemeWin32Classic), so the bug you are tring to dig might be bugs in there (well, also note that if you have wrong code such as Windows-path dependent code, it won't work. Here I continue winforms-related topic). To find out the culprit layer:

- If only non-Windows box fail, then it might be bugs in libgdiplus or Cairo as its background. If Only OSX fail, then it is likely to be some architecture (big endian etc.) related bug.

- Otherwise it is likely to be misimplementation of our class libraries including MWF controls. System.ComponentModel and System.*Design classes are also likely to be culprit.

See our WinForms page for more backgrounds.

find the culprit

The first stage is to find out the problematic component.

- We fix several bugs everyday. So always try the latest mono, mcs and libgdiplus from svn. Trying MWF on stable releases (1.1.13.x) is almost helpless. The code is rapidly improved by the MWF team.

- Compile your code with -debug option. It will result in more informative stack trace (file and line).

- Check existing bugs. When you hit a problem, there would be the same or similar problem.

- Reproducible bugs are much better than non-reproducible bugs. Try to find a consistent way to reproduce the bug. On bug report, having the procedure is much more helpful. It is also nice if you can provide reproducible steps for existing bug reports that do not have repro steps.

- Try simpler controls for whatever you found bugs as well. For example, if ComboBox focus does not work, there is likely another bug on focus handling.

- sometimes trying indifferent controls also helps. It might not be a bug in the exact control you are touching, but might be a bug in the container, MDI, Form or drawing in general.

create a simple repro code

Once you think you found the culprit control, try to create a simpler reproducible code. It does not only help debugging (those bugs without "readable" code are not likely be fixed soon), but also avoids your mistaken assumption on bugs.

When you try to create simple repro code, you can reuse existing simple code collection.

If you are not accustomed to non-VS.NET coding, Here is my example code:

using System;

using System.Windows.Forms;

public class Test : Form

{

public static void Main ()

{

Application.Run (new Test ());

}

public Test ()

{

#region You can just replace here.

ComboBox cb = new ComboBox ();

cb.DropDownStyle = ComboBoxStyle.DropDownList;

cb.DataSource = new string [] {"A", "B1", "B2",

"C", "D", "D", "Z", "T1", "B3", "\u3042\u3044"};

Controls.Add (cb);

#region

}

}

Compile it with mcs -pkg:dotnet blah.cs.

bugfixing

After you create good bug reports (also note that we have a general explanation on it), it wouldn't be that difficult to even fix the bug :-) Actually we receive a lot of bug reports, while we have relatively few bugfixors.

As Ximian used to be GNOME company, external skilled Windows developers would know much better on how to implement MWF controls and fix bugs. In my case I even had to start bugfixing from learning what kind of WindowStyles there are (WS_POPUP etc. that we define in XplatUIStructs.cs).

My way of debugging won't be informative... Anyways. When I try to fix those MWF bugs, I just use a text editor and mono --trace. My frequently used option is --trace=T:System.Windows.Forms.blahControl. I mostly spend time on understanding the component I'm touching.

When you created a patch (hooray!), adding [PATCH] prefix on the bug summary would be nicer so that we can find possible fixes sooner.

Another NVDL implementation

Lately Makoto Murata told me that another NVDL implementation appeared. I am extraordinary pleased. Before that there was only my NVDL implementation, which means that NVDL has not been worthy of the name of a "standard".

It is also awesome that this implementation is written in Java, which means that it has different fields of users. Now I wonder, when will C implementation be there? :-)

BTW Makoto Murata also gave me his NVDL tests which is in preparation and I ended up to make several updates on my implementation. Some tests makes me feel something like "uh? Is NVDL such a specification?" i.e. I had some misunderstanding on the specification. With it I could also update a few parts to the FDIS specification (I wrote my implementation when it was FCD). As far as I know the tests will be released under a certain open source license (unlike BumbleBee XQuery test collection).

the Mono meeting in Tokyo

We have finished the latest Mono meeting in Tokyo targetting Japanese, with Alex Schonfeld of popjisyo.com. (we call it "the first meeting" in Japanese but as you know we used to have some non-Japanese ones.)

At the time we announced it [1] [2] I personally expected 5 or 6 people at best so that we can make somewhat in-depth discussion about Mono, depending on the attendees. What happened was pretty different. We ended up to welcome 16 external attendees (including akiramei), so we rented the entire room in the bar and mostly spent time from our presentations and Q & A between them and me. We were so surprised that such tiny pair of announcements were read by so many people.

It was a big fun - there was an academic guy who writes his own C#/VB compilers referencing mcs/gmcs. There was a guy who wants to know some details about JIT. I also met a person who was trying my NVDL validator. There was a number of people who are interested in running their applications under Mono (well, Alex has also long been interested in ASP.NET 2.0 support). Many guys were interested in how Mono is used in production land. There were also many guys claiming that Mono needs easiler debugging environment. Some people are interested in how Mono is ready for commercial support.

As usual I have some regressions on the progress but the meeting went so well. It is very likely that we plan another Japanese Mono meeting.

opt. week

Happy new year (maybe in Chinese context).

About a week and more ago Miguel told me that there is summary page for performance comparison for Java, Mono and .NET by Jeswin.P.

It is always nice to see that someone is kind enough to provide such information :-) The numbers however are not good for Mono, so I kinda started attempt to enhance mono XML performance.

Miguel told me that I can't know how those performance improvement record is impressive to some users (well, I totally agree; I was not surprised to see the perf. results). So I started to record that XMLmark results, now using NPlot (for Gtk# (0.99)). You can see sort-of-daily graph, like:

As you see, this measuring box has some errors, but it won't matter so much. Anyways, not bad. The most effective patch was from Paolo.

The next item I want(ed) to optimize was Encoding. I have already done some tiny sort of optimization in UTF8Encoding (the latest svn should be about 1.2x-1.3x faster than 1.1.13), but it needs more love. It is ongoing.

The best improvements I have made was not any of the above. I had been optimizing my RelaxngValidatingReader by feeding OpenDocument grammar which is about 530 KB and some documents (I extracted it and converted to .odt using OO.o 2.0 and extracted content.xml).

At first I couldn't start from that massive schema. I had to fix several bugs. I spent most of hacking time on it last winter vacations. It became pretty solid. Those new year days I started to validate some OpenDocument content.

I started from one random pickup of OASIS docs, maybe this one, which was about 70KB, 1750 lines of instance. At svn r54896 it almost stopped around line 30: too bad start. I started to read James Clark's derivative algorithm again, and started several attempt to optimize it. After some step-by-step improvements, at r55497 the validator finally reached to the end for the first time. I still needed further optimization since it cost me about 80 seconds, but one hour later it went nearly 20x faster, at r55498.

After having 70KB document validated within 5 seconds, I thought it was time to try the biggest one - OpenDocument 1.0 specification. I added indentation to this 4MB of content.xml and it became 5MB. After fixing XML Schema datatype bug that was found at line 4626, I had to make further optimization, since it was eating up the memory, looked almost infinite. I dumped the list of total memory consumption for each Read(), but the fix (r56434) was stupidly simple. Anyways finally I could validate 5MB of an instance in 6 seconds.

So, now it is possible enough to validate OpenDocument xml using RelaxngValidatingReader, for example with AODL, An OpenDocument Library.

Maybe it's time to fix remaining 8 bugs found by James Clark's test suite - 7 are from XLink 5.4 violation, and 1 is in unique name analysis.

(sorry but no plot for RELAX NG stuff, it is kind of non-drawable line ;-)

Lame solution, better solution

During my in-camera vacation work, I ended up to fix some annoying bugs in Commons.Xml.Relaxng.dll. Well, they were not that annoying. I just needed to revisit section 4.* and 7.* of the specification. Thanks to my own God, I was pretty productive yesterday to dispose most of them. After r54882 in our svn, the implementation became pretty better. Now I have 13 out of 373 testcases from James Clark's test suite. Half of them are XLink URI matter and most of the remaining bits are related to empty list processing (<list><empty></list>, which usually is not likely to happen).

Speaking of Commons.Xml.Relaxng.dll, I updated my (oh yeah here I can use "the" instead of "my" for now) NVDL implementation to match the latest Final Draft International Standard (FDIS). The specification looks fairly stable and there were little changes.

MURATA Makoto explains how you can avoid silly processor-dependent technology (not) to validate xml:*, such as System.Xml.Schema.XmlSchemaValidationFlags. Oh, well you don't have to worry about that there is no validator for Java - someone in rng-users ML wrote that his group is going to implement one. It would be even nicer if someone provide another implementation for libxml (it won't make sense if I implement some).

I hope I have enough time to revisit this holiday project (yeah it is mostly done in my weekends and holidays) rather than wasting my time on other silly stuff.

Holy shit

Wow, I haven't been blogged for a while. It just tells that I have done nothing that long. And am going to hibernate this year (having summer vacations next two weeks).

I wanted to get somewhat useful schema before writing some code for Monodoc. After getting lengthy xsd, I decided to write dtd2rng based on dtd2xsd (this silly design is because I cannot make Commons.Xml.Relaxng as System.Xml dependency) and got a smart boy. (Well, to just get converted grammar like this, you could use trang).

We are going to have Mono meeting in Tokyo next Monday. The details are not set yet, but if any of you are interested, please feel free to ask me the details. (it is likely to be just having dinner and drinking, heh).

Last month I went to Kyoto (Japan) with Dan (Mills, aka thunder) and found a nice holy shit:

Unfortunately, I lost this religious treasure last week :-( I was pretty disappointed. It must be from I entered a Starbucks to I got on a train (I noticed that I lost it at that time). So I was wondering if I should ask those Starbucks partners like "didn't you guys see my shit here?" All my friends whom I asked for advice were saying I should do, so I ended up asking the partners.

"I might lost my cell accessory here."

"What colour was it?"

"Well, it was a golden cra..."

"Ok, please wait for a while"

... and then she went into staff room, and said there was no such one. After a few seconds of wondering if I should be more descriptive, but was reminded. Shame.

don't use DateTime.Parse(). DateTime.ParseExact() instead

It seems that very few people know that DateTime.Parse() is COM dependent, evil one. Since we don't support COM, those COM dependent functionality won't work fine on Mono.

Moreover, even in Microsoft.NET, there is no assurance that such string that DateTime.Parse() accepts on your machine is acceptable under other machines. Basically DateTime.Parse() is for newbies who don't mind unpredictable behavior.

Yes,

instead of

is quite annoying because of its lengthy parameters. If you think so, you can push Microsoft to provide an equivalent shortcut, like I did. But first thing you should do right now is to prepare your own MyDateTime.Parse() that just returns the same result of the above. It's also true to several culture-dependent String methods (IndexOf, LastIndexOf, Compare, StartsWith, EndsWith - all of them are culture sensitive, even with InvariantCulture) to avoid unexpected matches/comparisons (remember that "eno" and "eno\u3007" are regarded as equivalent).

Default encoding for mcs

Today I checked in a patch for mcs that changes the default encoding of the source file, to System.Text.Encoding.Default instead of Latin1. Surprisingly we have been using it as the default encoding, regardless of the actual environment. For example, Microsoft csc uses Shift_JIS on my Japanese box, not Latin1 (Latin1 is even not listed as candidate when we - Japanese - save files in text editors).

This change brought confusion, since we have several Latin1-dependent source files in our own class libraries, and on modern Linux environment the default encoding is likely to be UTF8, regardless of the region and the language (while I was making this change on Windows machine). Thus I ended up to add /codepage:28591 to some classlib Makefiles at first, and then to revert that change and started a discussion.

I felt kinda depressed, on the other hand I thought it's kinda interesting. I must be making such a change that makes sense, but it is likely to bring confusion to EuroAmerican people. Yes, that is what we (non-EuroAmericans) had experienced - we could not compile sources and used to wonder why mcs can't, until we tried to use e.g. /codepage:932. When I went to Japanese Novell customer to help deploying ASP.NET application on SuSE server, I had to ask them to convert the source to UTF-8 (well now I think I could ask to set fileEncoding in web.config instead, but anyways not sure whether CP932 encoding worked fine or not).

If mcs does not premise Latin1, your Latin1-dependent ASP.NET applications won't run fine in the future version of mono on Linux. Even though mcs compiled sources with the platform-dependent default encoding instead of Latin1, it does not mean that your ASP.NET applications written in your "native" encoding (on Windows) will run fine on Linux without modifications. As I wrote above, the default encoding is likely to be UTF8 and thus you will have to explicitly specify "fileEncoding" in your web.config file.

Sounds pretty bad? Yeah, might be for Latin1 people. However, it has been happening on non-Latin1 environment. Thus almost no one is likely to be saved. But going back to "Latin1 by default" does not sound a good idea. At least it is not a culture-neutral decision.

There is a similar but totally different problem on DllImportAttribute.CharSet when the value is CharSet.Ansi. It is locale dependent encoding on Microsoft.NET, but in mono it is always UTF-8. The situation is similar, but here we use platform-neutral UTF-8 (well, I know that UTF-8 itself is still not culture-neutral, as it definitely prefer Latin over CJK. It lays Latin letters out in 2 byte areas, while most of CJK letters are in 3 byte areas, including only about 200 letters of Hiragana/Katakanas).

Managed collation support in Mono

Finally I checked in managed collation (CompareInfo) implementation in mono. It was about four months task, while I mostly spent my time just to find out how Windows collation table is composed. If Windows is conformant to Unicode standards (UTS #10, Unicode Collation Algorithm, and more importantly Unicode Character Database), it would have been much shorter (maybe in two months or so).

Even that I spent extra months, it resulted in a small good side effect - We got the Fact about Windows collation, which had been said good but in fact turned out not that good.

The worst concern now I have about .NET API is that CompareInfo is used EVERYWHERE we use corlib API such as String.IndexOf(), String.StartsWith(), ArrayList.Sort() etc. etc... They cause unexpected behavior on your application code which does not always have to be culture sensitive. We want them only under certain situations.

Anyways, now managed collation is checked in, though right now it's not activated unless you explicitly set environment variable. The implementation is almost close to Windows I believe. I started from SortKey binary data structures actually that was described in the book "Developing International Software, Second Edition"), sorting out how invariant GetSortKey() returns computed sort keys for each characters to find out how characters are categorized and how they can be drawn from Unicode Character Database (UCD). Now I know most of the composition of the sortkey table, which also uncovered that Windows sorting is not always based on UCD.

From Unicode Normalization (UTR #15) experience (actually it was the first attempt for my collation implementation to implement UTR #15, since I believed that Windows collation must have been conformant to Unicode standard), I made quick codepoint comparison optimization in Compare(), which made Compare() for the equivalent string 125x faster (in other words it was so slow). It became closer to MS.NET CompareInfo but still far behind ICU4C 3.4 (it is about 2x quicker). I'm pretty sure that it could be faster if I can spend more memory, but it is corlib where we should mind memory usage.

I tried some performance testing. Note that they are just some cases and collation performance is heavily dependent on the target strings. Anyways the results were like below and the code are here. (The numbers are seconds):

CORRECTION: I removed "Ordinal" comparison which was actually nothing to do with ICU. It was mono's internal (pretty straightforward) comparison icall.

| Options | w/ ICU4C 2.8 | w/ ICU4C 3.4 | Managed |

|---|---|---|---|

| None | 0.671 | 0.391 | 0.741 |

| StringSort | 0.671 | 0.390 | 0.731 |

| IgnoreCase | 0.681 | 0.391 | 0.731 |

| IgnoreSymbols | 0.671 | 0.410 | 0.741 |

| IgnoreKanaType | 0.671 | 0.381 | 0.741 |

MS.NET is about 1.2x faster than ICU 2.8 (i.e. it is also much slower than ICU 3.4). Compared to ICU 3.4, it is much slower, but Compare() looks not so bad.

On the other hand, below are Index search related methods (I used IgnoreNonSpace here. The numbers are seconds again):

UPDATED: after some hacking, I could reduce the execution time by 3/4.

| Operation | w/ ICU4C 2.8 | w/ ICU4C 3.4 | Managed |

|---|---|---|---|

| IsPrefix | 1.171 | 0.230 | 0.231 |

| IsSuffix | 0 | 0 | 0 |

| IndexOf (1) | 0.08 | 0.08 | |

| IndexOf (2) | 0.08 | 0.08 | |

| LastIndexOf (1) | 0.992 | 0.991 | |

| LastIndexOf (2) | 0.170 | 0.161 |

Sadly index search stuff looks pretty slower for now. Well, actually it depends on the compared strings (for example, changing strings in IsSuffix() resulted in that managed collation is the fastest. The example code is practically not runnable under MS.NET which is extremely slow). One remaining optimization that immediately come up to my mind is IndexOf(), where none of known algorithms such as BM or KMP are taken... They might not be so straightforward for culture-sensitive search. It would be interesting to find out how (or whether) optimizations could be done here.

the "true" Checklists: XML Performance

While I knew that there are some documents named "Patterns and Practices" from Microsoft, I didn't know that there is a section for XML Performance. Sadly, I was scarcely satisfied by that document. The checklist is so insufficient, and some of them are missing the points (and some are even worse for performance). So here I put the "true" checklists to save misinformed .NET XML developers.

- Design Considerations

- Avoid XML as long as possible.

- Avoid processing large documents.

- Avoid validation. XmlValidatingReader is 2-3x slower than XmlTextReader.

- Avoid DTD, especially IDs and entity references.

- Use streaming interfaces such as XmlReader or SAXdotnet.

- Consider hard-coded processing, including validation.

- Shorten node name length.

- Consider sharing NameTable, but only when names are likely to be really common. With more and more irrelevant names, it becomes slower and slower.

- Parsing XML

- Use XmlTextReader and avoid validating readers.

- When node is required, consider using XmlDocument.ReadNode(), not the entire Load().

- Set null for XmlResolver property on some XmlReaders to avoid access to external resources.

- Make full use of MoveToContent() and Skip(). They avoids extraneous name creation. However, it becomes almost nothing when you use XmlValidatingReader.

- Avoid accessing Value for Text/CDATA nodes as long as possible.

- Validating XML

- Avoid extraneous validation.

- Consider caching schemas.

- Avoid identity constraint usage. Not only because it stores key/fields for the entire document, but also because the keys are boxed.

- Avoid extraneous strong typing. It results in XmlSchemaDatatype.ParseValue(). It could also result in avoiding access to Value string.

- Writing XML

- Write output directly as long as possible.

- To save documents, XmlTextWriter without indentation is better than TextWriter/Stream/file output (all indented) except for human reading.

- DOM Processing

- Avoid InnerXml. It internally creates XmlTextReader/XmlTextWriter. InnerText is fine.

- Avoid PreviousSibling. XmlDocument is very inefficient for backward traverse.

- Append nodes as soon as possible. Adding a big subtree results in longer extraneous run to check ID attributes.

- Prefer FirstChild/NextSibling and avoid to access ChildNodes. It creates XmlNodeList which is initially not instantiated.

- XPath Processing

- Consider to use XPathDocument but only when you need the entire document. With XmlDocument you can use ReadNode() but no equivalent for XPathDocument.

- Avoid preceding-sibling and preceding axes queries, especially over XmlDocument. They would result in sorting, and for XmlDocument they need access to PreviousSibling.

- Avoid // (descendant). The returned nodes are mostly likely to be irrelevant.

- Avoid position(), last() and positional predicates (especially things like foo[last()-1]).

- Compile XPath string to XPathExpression and reuse it for frequent query.

- Don't run XPath query frequently. It is costy since it always have to Clone() XPathNavigators.

- XSLT Processing

- Reuse (cache) XslTransform objects.

- Avoid key() in XSLT. They can return all kind of nodes that prevents node-type based optimization.

- Avoid document() especially with nonstatic argument.

- Pull style (e.g. xsl:for-each) is usually better than template match.

- Minimize output size. More importantly, minimize input.

What I felt funky was that they said that users should use XmlValidatingReader. With that they can never say that XmlReader is better than SAX on performance, since it creates value strings for every node (so the performance tips are mutually exclusive). It is not evitable even if you call Skip() or MoveToContent(), since skipping validation on skipped nodes is not allowed (actually in .NET 1.0 Microsoft developers did that and it caused bugs in XmlValidatingReader).

DTLL 0.4

Jeni Tennison told me (well, some guys including me) that she put a refresh version of Datatype Library Language (DTLL), which is expected to be ISO DSDL part 5.

Since Mono already has validators for RELAX NG with custom datatype support and NVDL, there would be no wonder if I was trying to implement DTLL support.

The true history was earlier, but it was the last spring that I practically started to write code for DTLL version 0.3. After hacking on the grammar object model, I asked Jeni Tennison for some examples, and she was kind enough to give me a decent one (the latest specification now includes even nicer examples). With that I have finished the grammar object model.

The next was of course, compilation to be ready for validation. But at that stage I was rather ambicious to support automatic CLI type mapping from DTLL datatype. I thought it was possible, but I noticed that DTLL model was kinda non-deterministic ... that is, with that version of DTLL I had to implement some kind of backtracking of already-mapped part of data if the input raw string did not match in a certain choice branch. With some questions (such as why "enumeration" needs indirect designation by XPath), I asked her about the possibility of limitation of the flexibility of item-pattern syntax. In DTLL version 0.3, the definition of "list" was:

... and today I was reading the latest DTLL 0.4, I noticed that:

... so, the entire item-pattern went away (!). I was surprised at her decision, but I'd say, it is nice. List became just a list of strings... I wonder if it really cannot be typed pattern (I might be shooting my foot now). The specification also removed "enumeration", so the pattern syntax became pretty simple. This change means that almost all patterns are treated as regular expressions. I wonder if it is me to blame ;-)

Now I don't think it is possible to automatically generate "DTLL datatype to native CLI type" conversion code, but I think that such conversion code should be provided from DTLL users. So it seems time for me to restart hacking on it...

cyber crime / axml:id

Lately I was researching about trademarks (many Mono hackers know well about what I am talking here). Sadly, it was much deeper than I have expected. Trademarks are just like a tip on an iceberg. So I got contact with Japanese police and told all the facts I got. I want to believe they can handle the case nicely.

Japanese police is nervous on phishing websites. It was not by chance that I found that, since I was researching about trademarks and the system of Japanese trademark (law and the operation of it) is somewhat related to how to acquire phishable(?) domains legally. It's a bit interesting to learn about those buggy legal systems. Maybe I had better start creating patches to fix those bugs, but the bugs are somewhat complicated.

As the first stage, if any of you guys have received such mails from Japanese saying that you should buy the domains and/or trademarks, or you cannot make business advance into Japan, you can get contact with me. I would help your research on whether he or she really have such valid trademarks or not, though you should really ask Japanese lawyers if I said that the applicant does not or cannot own it. Sometimes Japanese agents are idiots so that they cannot even make appropriate research that I can make.

I noticed that xml:id Proposed Recommendation is out. I found that it states that xml:id is incompatible with Canonical XML in Appendices C.

Lately I rarely touch XML stuff so I had few ideas, but it came up to mind that if xml:id were assigned another namespace URI than http://www.w3.org/XML/1998/namespace, the problem would go away. But anyways now that we have exc-c14n, it's a tiny problem.

Also, I don't think .NET developers should worry about that. When someone implemented xml:id and noticed that it is incompatible with Canonical XML, then he or she would ask Microsoft to change their implementation not to mess it, like Microsoft developers lately had to make changes about validation on xml:* attributes. The problem and the solution are so easy. They would welcome such pollusion if it benefits them.

I don't like opt-out messages

Recently I often look back the last productive year. After Mono 1.0 release I have mostly done System.Xml 2.0 bits (editable XPathNavigator, XmlSchemaInference, XmlSchemaValidator, XmlReader.Create() and half-baked XQuery engine ... none of them rock though. I remember I once started to improve DataSet bits (DataView and related things), but now I don't work on them. Maybe something happened this year and I have done nothing wrt xml bits (well, except for NvdlValidatingReader). Maybe I need something interesting there.

...Anyways, here is the final chapter of Train of RantSort.

Train of Sort #5 - Is UCD (not UCA) used? -

I have wondered if Windows collator uses Unicode Character Database, the core of Unicode specification.

Windows treats U+00C6 (Latin small letter AE; Latin smal ligature AE) as equivalent to "ae". There is a character | U+01E3 (http://www.fileformat.info/info/unicode/char/01E3/index.htm) aka Latin small letter AE with Macron whose Normalization Form Decomposition (NFD) is U+00E6 U+0304 where U+0304 is Combining Macron.

So it looks like we can expect that Compare("\u01E3", "\u00E6\u0304") returns 0. However they are treated as different. Windows treats U+01E3 as equivalent to the sequence 'a' 'U+0304' 'e' 'U+0304'.

Similarly, circled CJK compatibility characters are not always related to ("related" actually means nothing but close primary weight though) non-circled one. For example U+32A2 (whose NFKD value is circled U+5199) is sorted next to U+5BEB (traditional Chinese) instead of U+5199 (Japanese and possibly simplified Chinese).

Those facts do not look like because Windows works differently than UCA. And since this NFD mapping comes from Unicode 1.1 that is released in 1993 (earlier than Windows 95) so they also do not look like because of the time lag. I wonder why those differences happen.

In fact recently I wrote about those CJK stuff to Michael Kaplan's blog entry and thanks to his courtesy I got some answers. That sadly seems irrelevant to the problem above, but he had an enlighting point - NFKD often breaks significant bits. I guess he is right (since some NFKD mappings contain hyphens which is treated specially), but I also think it depends on the implementation (am not clear about such cases at this stage).

It reminded me of some kind of contractions - when I read some materials on Hungarian sorting, I tried comparing "Z", "zs", "zzs" and so on. And on seeing sortkeys, I eventually found that "zszs" and "zzs" are regarded as equivalent on .NET. But I still don't know if it is incorrect or not (even after seeing J2SE 1.5 didn't regard them as equivalent). I doubt they are kinda source of destructive operations, but not sure.

... that's all for now (I feel sorry that there is no "conclusion" here). Windows Collation things are still in the black box, and for some serious development we certainly need explicit design to predict what would happen with collations.

Ok, time to hibernate. My writing battery is going to run out.

Train of Sort #4 - sortkey table optimization

With related to sortkey table and logical surface of collation, I think UCA handles things much better than Windows. Well, I didn't like "logical order exceptions" in UCA, but it disappeared in the latest specification.

For "shifted" characters such as hyphens and apostrophe characters, GetSortKey() considers those characters only at the identical weight. In that case, only primary weight is computed as the actual sortkey value (like 01 01 01 01 80 07 06 80 for ASCII Apostrophe where only 06 80 represent that character). BTW there are such pairs of two characters that differ only in thirtiary weight (width sensitivity lies there) such as U+0027 and U+FF07 (full-width Apostrophe). Those sortkeys are regarded as equivalent when StringSort is not specified as CompareOptions flags (it does not happen when you use Compare()).

Windows has no concept of (non-)blocking diacritical marks. Windows just adds diacritical weight of nonspacing characters to that weight of previous primary character. And since the weight does not handle value more than 255, it often overflows and goes back to 0 (BTW the value 0 indicates the end of the sortkey in Windows API). Moreover, the fact means that there could be several pairs of sequences of diacritical marks that are incorrectly treated as equivalent. I recently mentioned one of such case in mono-devel-list.

Windows collation table is not internally optimizable. For example, (1)Hangul Syllables are sorted, being mixed with Jamo. In UCA it is implemented to be optimizable, but on Windows L/V/T forms are mixed depending on character equivalence and no blank values between them, so the large Hangul Syllable block could not be computed but must just be filled in a bulky table. In UCA and CLDR there is "optimize" option to take. Another example is (2)CJK compatibility characters. Those "compatibility" characters can, however, never be compatible with any of CompareOptions flags. Instead they are sorted next to the "compatible" character. However, if there is no such design, the sortkey table could be impressively optimized since the large chunk of CJK ideograph characters could be computed from their codepoints. I think they could be differentiated only in the third weight area (where width and case insensitivity coexist), or the fourth weight area (as well as Japanese Kana, since anyways those characters differ in primary level).

CJK sortkeys and CJK exntension sortkeys are immediately joined. On Windows, CJK ideograph ends at U+9FA5. In the latest Unicode the last CJK ideograph character is U+9FBB. But there is no proper space for U+9FA6-U+9FBB in Windows sortkey table (the sortkey for U+9FA5 is F0 B4 01 01 01 01 and U+F900 has F0 B5 01 01 01 01). The fact tells that it is impossible for CompareInfo/LCMapString to support higher Unicode versions, with existing Windows sortkey design. That is one of the reason that Windows can never support sorting in higher Unicode standards than version 1.0 which makes sense, as long as they keep sortkey values compatible.

Train of Sort #3 - Unicode version inconsistency

I forgot to announce that I had written an introduction to XML Schema Inference (it was nearly a month ago). I tried to describe what XML Schema Inference can do. As a technically-neutral person, I am also positive to describe what it cannot do.

I think this is also applicable to other xml structure inferences. Actually I applied it to my RelaxngInference which is however not complete. RELAX NG is rather flexible and you can add choice alternatives mostly anytime, but the resulting grammar will become sort of garbage ;-) Thus, simply-ruled structure still does make sense.

Ok, here is the third chapter of sort of crap train of sort.

Train of Sort #3 - Unicode version inconsistency

On surfing Japanese web pages, I came across aforum that there was a Japanese people in trouble that String.IndexOf() ignores U+3007 ("ideographic number zero" in "CJK symbols and punctuation") and thus his application went into infinite loop.

That code should be changed to use CompareInfo.IndexOf (s, "--", CompareOptions.Ordinal), but it seemed too difficult for those guys who posts there to understand why such infinite loop happen. That U+3007 is a number "zero" and on comparing strings "10000" and "1" are regarded as equivalent if those zeros are actually U+3007. I wonder if that is acceptable for Japanese accounting softwares.

Since there is no design contract for Windows collation, I have even no idea if it is a bug in Windows or this is Microsoft's culture (string collation is culture dependent matter and there is no definition).

When I was digging into CompareOptions, I was really mazed. At first I thought that IgnoreSymbols just indicates to omit characters whose unicode category is BlahBlahSymbol and IgnoreNonSpace indicates to omit NonSpacingMark characters. It is totally wrong assumption. Many characters were ignorable than I have expected. For example, Char.UnicodeCategory() returns "LetterNumber" for U+3007 above.

That was unfortunately before I got to know that CompareInfo() is based on LCMapString whose behavior comes from Windows 95. Since there was no Unicode 3.1 it is not likely to happen that it matches with what Char.GetUnicodeCategory() returns for each character. However, there are non-ignorable characters which are added at Unicode 2.0 and 3.0 stages (latter than Windows 95). They are still in the dark age.

Thus, there are several characters that are "ignored" in CompareInfo.Compare(): Sinhala, Tibetan, Myanmar, Etiopic, Cherokee, Ogham, Runic, Tagalog, Hanunoo, Philippine, Buhid, Tagbanwa, Khmer and Mongolian characters, and Yi syllables and more - such as Braille patterns.

There should be no reason not to be consistent with the other Unicode related stuff such as System.Text.UnicodeEncoding, System.Char.GetUnicodeCategory() and especially String.Normalize().

So, the ideal solution here would be to use Unicode 3.1 table (further ideal solution is to use the latest Unicode 4.1 for all the related stuff). So I would really like to push Microsoft to discard existing CompareInfo (leave it as is) and add collation support in an alternative class (or inside CharUnicodeInfo or whatever). Sadly it is impossible just to upgrade Unicode version used in LCMapString, keeping it compatible. It is because of some inconsistent character categories (well, anyways .NET 2.0 upgraded the source of UnicodeCategory thus GetUnicodeCategory() returns inconsistent values for some characters though) and a design failure in Windows sortkey table (I'll describle more about it maybe next time).

Train of Sort #2 - decent, native oriented

Since I have actually written nothing yesterday, this is the first body of this Train of Sort series.

I think Windows collation table is designed rather carefully than UCA DUCET. On looking into blocks (U+2580 to U+259F) I noticed that many of them have the identical primary weight (though I guess U+25EF is incorrect). Thus some of them are equivalent when you pass CompareOptions.IgnoreNonSpace to those collation methods in CompareInfo. Another example, non-JIS CJK square characters are sorted in their Alphabetical names (though I doubt U+33C3).

Similarly, Windows collation is rather native oriented than UCA. I agree with Michael Kaplan on that statement (it is written in his entry I linked yesterday).

However, it is not always successful. Windows is not always well-designed for native speakers.

What I can immediately tell is about Japanese: Windows treats Japanese vertical repetition mark (U+3021 and U+3022) as to repeat just previous one character. That is totally wrong and none of native Japanese will interpret that mark to work like that. It is used to repeat two or more characters and that depends on context. For example, "U+304B U+308F U+308B U+3022" is "kawaru-gawaru" should be equivalent to U+304B U+308F U+308B U+304C U+308F U+308B in Hiragana. It should not be U+304B U+308F U+308B U+308B U+3099. Since the behavior of those marks is unpredictable (we can't determine how many characters are repeated), it is impossible to support U+3021 and U+3022 as repetition.

Similarly some Japanese people use U+3005 to repeat more than one character (I think this might be somewhat old way or incorrect, but that repeater is anyways somewhat older one and it is notable that it is Japanese that use this character in such ways).

They are not in part of UCA and all remaining essense of Japanese sorting (like dash mark interpretation) is common. Windows is too "aggressive" to support native languages than modest Unicode Standards do.

Well, Windows is not always worse than UCA. In Unicode NFKD, U+309B (full-width voice mark) is decomposed to U+0020 U+3099. Since every Kana character that have a voice mark is decomposed to non-voiced Kana and U+3099 (combining voice mark), they can never be regarded as equivalent (since there is extraneous U+0020). Since almost all Japanese IM puts U+309B instead of U+3099 for full-width voice mark, this NFD mapping totally does not make sense with related to collation. It's just a mapping failure.

System.Xml code is not mine

I came across M.David Peterson thinking that I have written all xml code. It is quite incorrect ;-)

- System.Xml - XmlReader and XmlDocument related stuff are originally from Jason Diamond. XmlWriter related stuff are from Kral Ferch.

- System.Xml.Schema - Dwivedi, Ajay kumar wrote non-compilation part of them.

- System.Xml.Serialization - Lluis Sanchez rules.

- System.Xml.XPath - Piers Haken implemented most of our XPath engine. After that, Ben Maurer restructured the design for XSLT. XPathNavigator is Jason Diamond's work.

- System.Xml.Xsl - It is Ben Maurer who wrote most of the code.

Well, yes, I wrote most of other part than listed above (what remains ? ;-) I am just a grave keeper. And recently Andrew Skiba from Mainsoft is becoming another code hero in sys.xml area, contributing decent test framework and bugfixes in XML parsers and XSLT.

Train of Sort

The best approach to interoperability is to focus on getting widespread, conformant implementation of the XSLT 2.0 specification.

I just quoted from Microsoft's statement on XML Schema. Mhm, am so dazzled that I might have mistyped.

Actually it is quite wrong. There are some bugs in XML Schema specification e.g. undefined but significant order that happens with a combination of complex type extensions and substitution groups. None of XML schema implementation can be consistent with broken specification.

Train of Sort #1 - introduction

I have been working on our own text string collation engine that works like Windows. In other words I am working on our own System.Globalization.CompareInfo. Yesterday I posted the first working patch which is however highly unstable.

We used to use ICU for our collation engine, and currently it is disabled by default. Sadly there were some problems between our need and ICU. Basically ICU is for Unicode Collation Algorithm (UCA). Windows collation history started earlier than that of UCA, so it has its own way for string collation.

Since Windows collation is older, it has several problems that UCA does not have. Michael Kaplan, the leading Windows I18N developer (if you are interested in I18N his blog is kinda must read), once implied that Windows collation does better than UCA (he did not assert that Windows is better, so the statement is not incorrect). When I read that, I thought it sounds true. UCA - precisely, Default Unicode Collation Element Table (DUCET) - looks less related to native text collation (especially when it just depends on codepoint order in Unicode). However, on digging into Windows collation, I realized that it is not true. So here I start to describle the alternative side of Windows collation for a few days.

.NET developers want inconsistent schema validity on xml:* attributes?

Norman Walsh pointed out XInclude inconsistence against grammar definition languages. Unlike xml:id I pointed out nearly a few months ago, I believe XInclude, the new spec, is wrong here.

Why xml:* attributes should be specified in schemas you use? Because sometimes we want to restrict the value of those attributes e.g. limit "en" and "ja" in my local Japanese application. Similarly we might want to reject xml:base so that our simple stylesheet don't copy those attributes into results blindly, or processor don't read files from unexpected location. It is applications' matter.

Am disappointed at the fact that there are so many .NET developers saying that the processor should be able to accept xml:* attributes blindly. Do they want schema documents inconsistent between the processors?

I don't believe we should make XML Schema sucks just to save XInclude which is anyways borking against other schema languages.

XsltSettings.EnableDocumentFunction

This is a short followup for XslCompiledTransform. It accepts a new optional argument XsltSettings. It is an enum that holds two switches:

- EnableDocumentFunction

- EnableScript

There is a reason why document("") could be optional. When document() function receives an empty string argument, it returns a node-set that contains the stylesheet itself. That however means, XSLT engine must preserve reference to the input stylesheet document (XPathNavigator). There was a bug report to MS feedback that document() could not be resolved (I once refered to it). So this options looks like Microsoft's response to developers' need.

I thought that not keeping source document is a good idea, so I tried to remove all the references to XPathNavigator clones in our XslTransform (except for document() function). After some hacking, I wrote a simple test which assures that XslTransform does not have reference to the document:

So I tried it under Windows:

$ csc test.cs /nologo /nowarn:618 $ mono test.exe load : False transform : False $ ./test load : True transform : False

Hmm... I wonder if I missed something.

NvdlValidatingReader

Recently I put another XML validator in Mono - NvdlValidatingReader. It implements NVDL, ISO DSDL part 4 Namespace-based Validation Dispatching Language.

It is in our Commons.Xml.Relaxng.dll. I temporarily put autogenerated ndoc documents (no descriptive documents there). It will be included in the next release of Mono. For now, I haven't prepared independent archive, so get the sources from mono SVN repositry. Compiled binary should be in the latest monocharge.

Well, for those who want instant dll, you can get it from here:Commons.Xml.Relaxng.dll.

There is also a set of example code which demonstrates validation: nvdltests.zip

I'm too lazy to write something new, so here am mostly copying the description below from mcs/class/Commons.Xml.Relaxng/README.

NVDL

NvdlValidatingReader is an implementation of ISO DSDL Part 4 Namespace-based Validation Dispatching Language (NVDL). Note that the development is still ongoing, and NVDL specification itself is also still not in standard status as yet.

NOTE: It is "just started" implementation and may have limitations and problems.

By default, NvdlValidatingReader supports RELAX NG, RELAX NG Compact syntax, W3C XML Schema and built-in NVDL validations, however without "PlanAtt" support.

Usage

Using built-in RELAX NG support.

static NvdlReader.Read() method reads argument XmlReader and return NvdlRules instance.

NvdlValidatingReader is instantiated from a) XmlReader to be validated, and b) NvdlRules as validating NVDL script.

Custom validation support

NvdlConfig is here used to support "custom validation provider". In NVDL script, there could be any schema language referenced. I'll describe what validation provider is immediately later.

[*1] Of course Schematron should receive its input as XPathNavigator or IXPathNavigable, but we could still use ReadSubtree() in .NET 2.0. NvdlValidationProvider

NvdlValidationProvider

To support your own validation language, you have to design your own extension to NvdlValidationProdiver type.

Abstract NvdlValidationProvider should implement at least one of the virtual methods below:

- CreateValidatorGenerator (NvdlValidate validate, string schemaType, NvdlConfig config)

- CreateValidatorGenerator (XmlReader schema, NvdlConfig config)

Each of them returns NvdlValidatorGenerator implementation (will describe later).

The first one receives MIME type (schemaType) and "validate" NVDL element. If you don't override it, it treats only "*/*-xml" and thus creates XmlReader from either schema attribute or schema element and passes it to another CreateValidatorGenerator() overload.

If this (possibly overriden) method returns null, then this validation

provider does not support the MIME type or the schema document.

The second one is a shorthand method to handle "*/*-xml". By default it just returns null.

Most of validation providers will only have to override the second overload. Few providers such as RELAX NG Compact Syntax support will have to overide the first overload.

NvdlValidatorGenerator

Abstract NvdlValidatorGenerator.CreateValidator() method is designed to create XmlReader from input XmlReader.

For example, we have NvdlXsdValidatorGenerator class. It internally uses XmlValidatingReader which takes XmlReader as its constructor parameter.

An instance of NvdlValidatorGenerator will be created for each "validate" element in the NVDL script. When the validate element applies (for a PlanElem), it creates validator XmlReader.

XslCompiledTransform

Happy new year. (I was totally hibernating this winter ;-)

Recently Microsoft pushed another CTP version of Whidbey and I found there is a new XSLT implementation named XslCompiledTransform (BTW MSDN documentation are so obsolete that it still contains XsltCommand and XQueryCommand). It is in System.Data.SqlXml.dll, and (as long as I see Object Browser) there is no other type than XslCompiledTransform related things. (As compared to the assembly file name, it is somewhat funky.)

XslCompiledTransform looks coming from XsltCommand which is based on executable stylesheet IL code like Apache XSLTC. I wonder how many existing types such as XPathExpression and XPathNodeIterator are used in this new implementation. They might exist just for historical extension support.